Recent Works:

- NAACL 2025 - HMT: Hierarchical Memory Transformer for Efficient Long Context Language Processing

- FCCM 2025 - InTAR: Inter-Task Auto-Reconfigurable Accelerator Design for High Data Volume Variation in DNNs

- ICLR 2025 - Optimized Multi-token Joint Decoding with Auxiliary Model for LLM Inference

- AAAI 2025 - Dynamic Width Speculative Beam Decoding for Efficient LLM Inference

Software:

Collaborators:

- Zongyue Qin

- Maryam Haghifam

- Yizhou Sun

Prior Works:

- Caffeine: Towards Uniformed Representation and Acceleration for Deep Convolutional Neural Networks

- Optimizing FPGA-based Accelerator Design for Deep Convolutional Neural Networks

- Automated Systolic Array Architecture Synthesis for High Throughput CNN Inference on FPGAs

- CLINK: Compact LSTM Inference Kernel for Energy Efficient Neurofeedback Devices

- End-to-End Optimization of Deep Learning Applications

- StreamGCN: Accelerating Graph Convolutional Networks with Streaming Processing

- FlexCNN: An End-to-End Framework for Composing CNN Accelerators on FPGA

For Interested Students:

Please first check the recent works to see whether they align with your interests, and share some thoughts on the ongoing follow-up works when requesting research opportunities. The prior works are currently not maintained.

Summary

In this project, we focus on improving the efficiency of large and small language models and potentially extend to general deep neural networks for other applications. In terms of efficiency, we believe there are three key metrics to pay attention to:

- Generation Quality: How well can an LLM or SLM generate text from the prompts, as measured by quantitative metrics (e.g., ROUGE-L, F1, EM score) or learning-based evaluators (e.g., reward models)?

- Speed: How fast can an LLM or SLM generate tokens (e.g., time to first token (TTFT), time per output token (TPOT), throughput (tokens/s))?

- Energy/Cost efficiency: How many joules/dollars does one need to spend to get one output token?
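As a rough illustration of the speed and energy metrics above, the sketch below derives them from a timestamped generation trace. The `GenerationTrace` record and its field names are hypothetical, and the energy value is assumed to come from an external power measurement.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GenerationTrace:
    """Hypothetical record of one generation request."""
    request_time: float       # seconds, when the prompt was submitted
    token_times: List[float]  # seconds, arrival time of each output token
    energy_joules: float      # total energy measured for the request


def ttft(trace: GenerationTrace) -> float:
    """Time to first token: delay between the request and the first output token."""
    return trace.token_times[0] - trace.request_time


def tpot(trace: GenerationTrace) -> float:
    """Time per output token: average gap between consecutive output tokens."""
    n = len(trace.token_times)
    if n < 2:
        return 0.0
    return (trace.token_times[-1] - trace.token_times[0]) / (n - 1)


def throughput(trace: GenerationTrace) -> float:
    """Output tokens per second over the whole request."""
    return len(trace.token_times) / (trace.token_times[-1] - trace.request_time)


def joules_per_token(trace: GenerationTrace) -> float:
    """Energy spent per output token."""
    return trace.energy_joules / len(trace.token_times)


# Example trace: five tokens, the first after 0.5 s, then one every 0.1 s.
trace = GenerationTrace(0.0, [0.5, 0.6, 0.7, 0.8, 0.9], 10.0)
```

Multiplying `joules_per_token` by an energy price would give a cost-per-token estimate in the same way.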

To improve these three metrics, our lab pursues two directions:

- Algorithm: Novel LLM architectures for efficient information compression and retrieval, and speculative decoding to speed up token generation

- Hardware: Auto-reconfigurable accelerator design for flexible control and balance of memory access and computation efficiency

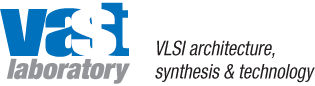

Novel LLM Architecture - Hierarchical Memory Transformer (HMT)

Lead: Zifan He

Transformer-based large language models (LLMs) have been widely used in language processing applications. However, due to the memory constraints of the devices, most of them restrict the context window. Even though recurrent models in previous works can memorize past tokens to enable unlimited context and maintain effectiveness, they have "flat" memory architectures. Such architectures have limitations in selecting and filtering information. Since humans are good at learning and self-adjustment, we believe that imitating the brain's memory hierarchy is beneficial for model memorization. Thus, we propose the Hierarchical Memory Transformer (HMT), a novel framework that facilitates a model's long-context processing ability by imitating human memorization behavior. Leveraging memory-augmented segment-level recurrence, we organize the memory hierarchy by preserving tokens from early input segments, passing memory embeddings along the sequence, and recalling relevant information from history. Evaluating on general language modeling, question-answering, and summarization tasks, we show that HMT consistently improves the long-context processing ability of existing models. Furthermore, HMT achieves a comparable or superior generation quality to long-context LLMs with 2-57x fewer parameters and 2.5-116x less inference memory, significantly outperforming previous memory-augmented models.
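The segment-level recurrence with memory recall described above can be sketched in a few lines. This is a toy illustration under strong assumptions, not the HMT implementation: `summarize` stands in for the backbone model by averaging token vectors, the memory update is a simple average, and all names and parameters are hypothetical.

```python
import math
from collections import deque


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def summarize(segment):
    """Placeholder for the backbone model: mean of the token vectors."""
    dim = len(segment[0])
    return [sum(tok[i] for tok in segment) / len(segment) for i in range(dim)]


def recall(query, memory_cache):
    """Attention-weighted sum of the cached memory embeddings."""
    if not memory_cache:
        return [0.0] * len(query)
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, m) / scale for m in memory_cache])
    return [sum(w * m[i] for w, m in zip(weights, memory_cache))
            for i in range(len(query))]


def process(tokens, segment_len=2, cache_size=3):
    """Process the input segment by segment with a bounded memory cache."""
    memory_cache = deque(maxlen=cache_size)  # only the most recent memories are kept
    recalled_per_segment = []
    for start in range(0, len(tokens), segment_len):
        segment = tokens[start:start + segment_len]
        query = summarize(segment)
        recalled = recall(query, list(memory_cache))
        recalled_per_segment.append(recalled)
        # Assumed memory update: average of the segment summary and the recalled vector.
        memory_cache.append([(q + r) / 2 for q, r in zip(query, recalled)])
    return recalled_per_segment, list(memory_cache)
```

Because the cache is bounded, memory use in this sketch stays constant regardless of input length; each segment sees earlier context only through the recalled vector.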

Ongoing Follow-up Works:

- Extend HMT with more levels of memory hierarchy and exploit the physical memory hierarchy of the computing system

- Combine HMT with state-space models to detect crucial information when processing the input sequence.

- HMT & RAG: improve the efficiency of RAG systems using HMT

- HMT plugin on FPGA (Lead: Jiahao Zhang)

Speculative Decoding for Efficient LLM Inference

Lead: Zongyue Qin

Large language models (LLMs) have achieved remarkable success across diverse tasks, yet their inference processes are hindered by substantial time and energy demands due to single-token generation at each decoding step. While previous methods, such as speculative decoding, mitigate these inefficiencies by producing multiple tokens per step, each token is still generated by its single-token distribution, thereby enhancing speed without improving effectiveness. In contrast, our work simultaneously enhances inference speed and improves the output effectiveness. We consider multi-token joint decoding (MTJD), which generates multiple tokens from their joint distribution at each iteration, theoretically reducing perplexity and enhancing task performance. However, MTJD suffers from the high cost of sampling from the joint distribution of multiple tokens. Inspired by speculative decoding, we introduce multi-token assisted decoding (MTAD), a novel framework designed to accelerate MTJD. MTAD leverages a smaller auxiliary model to approximate the joint distribution of a larger model, incorporating a verification mechanism that not only ensures the accuracy of this approximation but also improves the decoding efficiency over conventional speculative decoding. Theoretically, we demonstrate that MTAD closely approximates exact MTJD with bounded error. Empirical evaluations using Llama-2 and OPT models ranging from 13B to 70B parameters across various tasks reveal that MTAD reduces perplexity by 21.2% and improves downstream performance compared to standard single-token sampling. Furthermore, MTAD achieves a 1.42x speed-up and consumes 1.54x less energy than conventional speculative decoding methods. These results highlight MTAD's ability to make multi-token joint decoding both effective and efficient, promoting more sustainable and high-performance deployment of LLMs.
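For context, the conventional speculative decoding baseline mentioned above verifies draft tokens one at a time against the large model's single-token distributions. The sketch below shows that standard accept/reject step only; it is not MTAD's joint verification. Distributions are passed in as plain dictionaries, and the function name and arguments are hypothetical.

```python
import random


def verify_draft(draft_tokens, draft_probs, target_probs, rng):
    """Standard speculative sampling check for one drafted sequence.

    draft_probs[i] and target_probs[i] map token -> probability at position i
    under the small and large model, respectively. Returns the accepted
    tokens; at the first rejection, a replacement token is sampled from the
    residual distribution max(0, p - q) and verification stops.
    """
    accepted = []
    for i, tok in enumerate(draft_tokens):
        q = draft_probs[i][tok]
        p = target_probs[i].get(tok, 0.0)
        if rng.random() < min(1.0, p / q):
            accepted.append(tok)
            continue
        # Rejected: resample from the normalized residual distribution.
        residual = {t: max(0.0, pt - draft_probs[i].get(t, 0.0))
                    for t, pt in target_probs[i].items()}
        total = sum(residual.values())
        tokens = list(residual)
        weights = [residual[t] / total for t in tokens]
        accepted.append(rng.choices(tokens, weights=weights)[0])
        break
    return accepted


rng = random.Random(0)
same = [{"a": 0.5, "b": 0.5}, {"a": 0.5, "b": 0.5}]
all_accepted = verify_draft(["a", "b"], same, same, rng)
```

When the two models agree, every draft token is accepted. Full implementations also sample one extra token from the large model when no draft token is rejected, which is omitted here for brevity.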

Large language models (LLMs) have shown outstanding performance across numerous real-world tasks. However, the autoregressive nature of these models makes the inference process slow and costly. Speculative decoding has emerged as a promising solution, leveraging a smaller auxiliary model to draft future tokens, which are then validated simultaneously by the larger model, achieving a speed-up of 1-2x. Although speculative decoding matches the distribution of multinomial sampling, multinomial sampling itself is prone to suboptimal outputs, whereas beam sampling is widely recognized for producing higher-quality results by maintaining multiple candidate sequences at each step. This paper explores the novel integration of speculative decoding with beam sampling. However, there are four key challenges: (1) how to generate multiple sequences from the larger model's distribution given draft sequences from the small model; (2) how to dynamically optimize the number of beams to balance efficiency and accuracy; (3) how to efficiently verify the multiple drafts in parallel; and (4) how to address the extra memory costs inherent in beam sampling. To address these challenges, we propose dynamic-width speculative beam decoding (DSBD). Specifically, we first introduce a novel draft and verification scheme that generates multiple sequences following the large model's distribution based on beam sampling trajectories from the small model. Then, we introduce an adaptive mechanism to dynamically tune the number of beams based on the context, optimizing efficiency and effectiveness. Besides, we extend tree-based parallel verification to handle multiple trees simultaneously, accelerating the verification process.
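To illustrate why keeping several candidate sequences per step can help, here is a minimal deterministic beam search over a toy next-token model. It is plain top-k beam search, not beam sampling and not DSBD; `toy_model` and its probabilities are invented for the example.

```python
import math


def beam_search(next_log_probs, width, steps):
    """Keep the `width` highest-scoring prefixes after each decoding step."""
    beams = [((), 0.0)]  # (token prefix, cumulative log-probability)
    for _ in range(steps):
        candidates = []
        for prefix, score in beams:
            for tok, lp in next_log_probs(prefix).items():
                candidates.append((prefix + (tok,), score + lp))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:width]
    return beams


def toy_model(prefix):
    """Hypothetical model where the locally best first token leads to a worse sequence."""
    if not prefix:
        return {"a": math.log(0.6), "b": math.log(0.4)}
    if prefix[-1] == "a":
        return {"x": math.log(0.5), "y": math.log(0.5)}
    return {"x": math.log(0.9), "y": math.log(0.1)}


greedy_best = beam_search(toy_model, width=1, steps=2)[0]  # follows "a" (sequence probability 0.30)
beam_best = beam_search(toy_model, width=2, steps=2)[0]    # finds "b", "x" (sequence probability 0.36)
```

The wider beam recovers the higher-probability sequence at the cost of scoring more candidates per step, which is the efficiency and accuracy trade-off that the number of beams controls.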

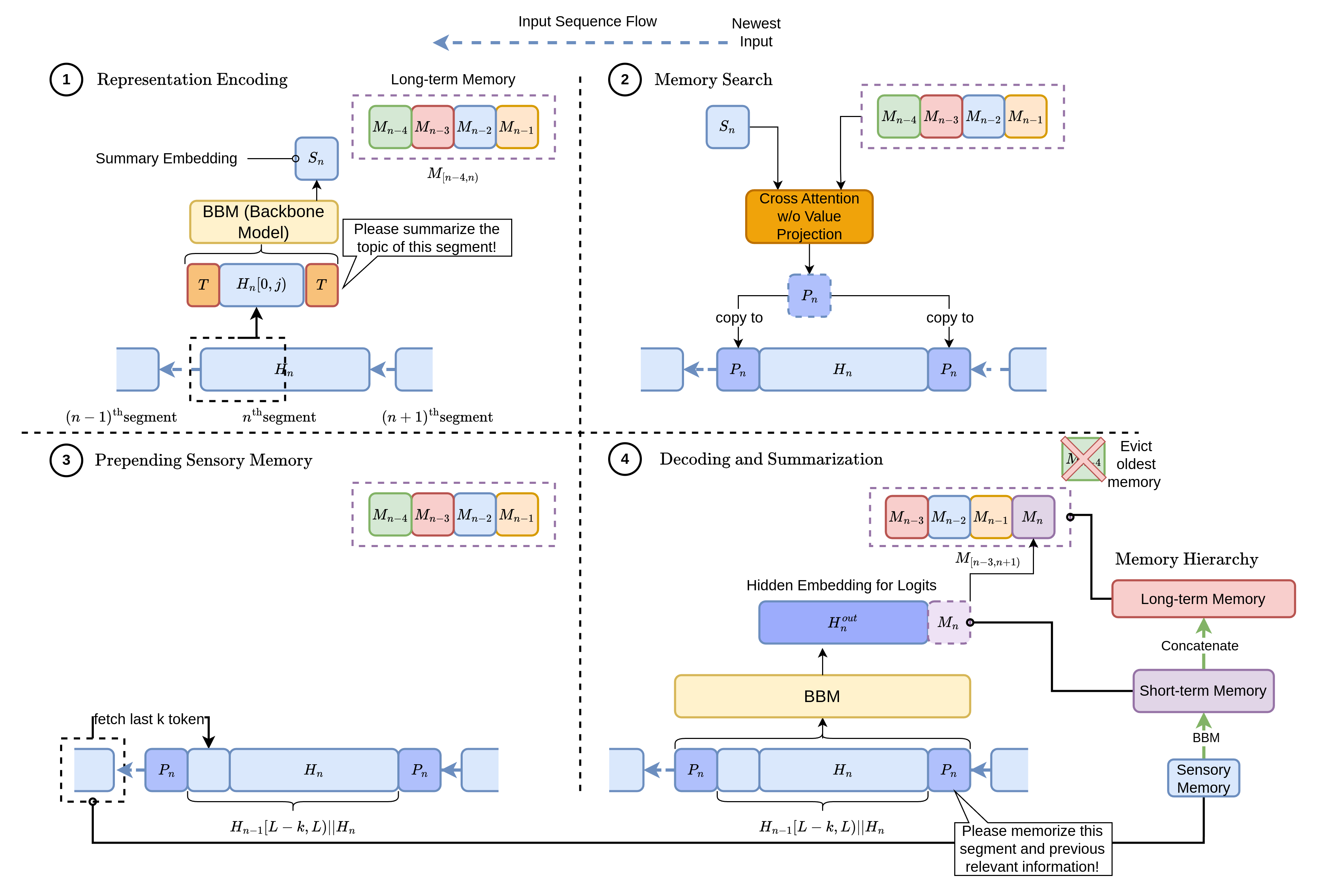

Hardware Design - Inter-Task Auto-Reconfigurable Accelerator (InTAR)

Lead: Zifan He

The rise of deep neural networks (DNNs) has driven an increased demand for computing power and memory. Modern DNNs exhibit high data volume variation (HDV) across tasks, which poses challenges for FPGA acceleration: conventional accelerators rely on fixed execution patterns (dataflow or sequential) that can lead to pipeline stalls or necessitate frequent off-chip memory accesses. To address these challenges, we introduce the Inter-Task Auto-Reconfigurable Accelerator (InTAR), a novel accelerator design methodology for HDV applications on FPGAs. InTAR combines the high computational efficiency of sequential execution with the reduced off-chip memory overhead of dataflow execution. It switches execution patterns automatically with a static schedule determined before circuit design based on resource constraints and problem sizes. Unlike previous reconfigurable accelerators, InTAR encodes reconfiguration schedules during circuit design, allowing model-specific optimizations that allocate only the necessary logic and interconnects. Thus, InTAR achieves a high clock frequency with fewer resources and low reconfiguration time. Furthermore, InTAR supports high-level tools such as HLS for fast design generation. We implement a set of multi-task HDV DNN kernels using InTAR. Compared with dataflow and sequential accelerators, InTAR exhibits 1.8x and 7.1x speedups, respectively. Moreover, we extend InTAR to GPT-2 medium as a more complex example, which is 3.65-39.14x faster and 1.72-10.44x more DSP efficient than SoTA accelerators (Allo and DFX) on FPGAs. Additionally, this design demonstrates 1.66-7.17x better power efficiency than GPUs.
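The idea of fixing the execution pattern of each task ahead of time can be pictured with a very small scheduling sketch. The decision rule here is an assumption made for illustration and is not taken from the InTAR paper: a task runs sequentially when its intermediate output fits in an on-chip buffer, and in dataflow mode (streaming to its consumer) otherwise. Task names and sizes are hypothetical.

```python
def build_schedule(tasks, on_chip_bytes):
    """Assign an execution pattern to each task before the design is generated.

    tasks: list of (name, intermediate_output_bytes) in execution order.
    Assumed rule: outputs that fit on chip are kept there and the task runs
    sequentially; larger outputs are streamed to the next task (dataflow)
    so that they never need to be written to off-chip memory.
    """
    schedule = []
    for name, out_bytes in tasks:
        mode = "sequential" if out_bytes <= on_chip_bytes else "dataflow"
        schedule.append((name, mode))
    return schedule


# Hypothetical kernel with large variation in intermediate data volume.
tasks = [("qk_matmul", 1024), ("softmax", 4096), ("projection", 512)]
schedule = build_schedule(tasks, on_chip_bytes=2048)
```

Since the schedule is a fixed list known at design time, a generator would only need to emit the control logic and interconnects for the mode switches that actually occur.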

Ongoing Follow-up Works:

- Automatic compilation of InTAR accelerator design.

- Integrated scheduling, resource allocation, and floorplanning for InTAR design on GPUs and FPGAs.

Prior Works:

1. Caffeine: Towards Uniformed Representation and Acceleration for Deep Convolutional Neural Networks

With the recent advancement of multilayer convolutional neural networks (CNN), deep learning has achieved amazing success in many areas, especially in visual content understanding and classification. To improve the performance and energy efficiency of the computation-demanding CNN, FPGA-based acceleration has emerged as one of the most attractive alternatives. We design and implement Caffeine, a hardware/software co-designed library to efficiently accelerate the entire CNN on FPGAs. First, we propose a uniformed convolutional matrix multiplication representation for both computation-intensive convolutional layers and communication-intensive fully connected (FCN) layers. Second, we design Caffeine with the goal of maximizing the underlying FPGA computing and bandwidth resource utilization, with a key focus on bandwidth optimization through memory access reorganization, which was not studied in prior work. Moreover, we implement Caffeine in portable high-level synthesis and provide various hardware/software definable parameters for user configurations. Finally, we also integrate Caffeine into the industry-standard software deep learning framework Caffe.

2. Automatic Systolic Array Synthesis

Modern FPGAs are equipped with an enormous amount of resources. However, existing implementations have difficulty fully leveraging the computation power of the latest FPGAs. We implement CNN on an FPGA using a systolic array architecture, which can achieve high clock frequency under high resource utilization. We provide an analytical model for performance and resource utilization and develop an automatic design space exploration framework, as well as source-to-source code transformation from a C program to a CNN implementation using a systolic array. The experimental results show that our framework is able to generate the accelerator for real-life CNN models, achieving up to 461 GFLOPS for the floating-point data type and 1.2 TOPS for 8-16 bit fixed point.
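For readers unfamiliar with systolic arrays, the following sketch simulates a generic output-stationary array computing a matrix product cycle by cycle. It assumes the usual skewed input feeding, so that processing element (i, j) sees operand pair k at cycle i + j + k; this is a textbook model and not necessarily the architecture generated by our framework.

```python
def systolic_matmul(a, b):
    """Simulate C = A x B on an M x N output-stationary systolic array.

    Each processing element (i, j) holds one accumulator. With skewed
    inputs, A[i][k] and B[k][j] meet at PE (i, j) at cycle t = i + j + k,
    so the whole product takes M + N + K - 2 cycles.
    """
    m, k_dim, n = len(a), len(b), len(b[0])
    acc = [[0] * n for _ in range(m)]
    cycles = m + n + k_dim - 2
    for t in range(cycles):
        for i in range(m):
            for j in range(n):
                k = t - i - j  # index of the operand pair arriving this cycle
                if 0 <= k < k_dim:
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
# result == [[19, 22], [43, 50]], cycles == 4
```

The cycle count grows with the sum of the dimensions rather than their product, which is the source of the throughput advantage once the array is fully utilized.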

The project above works on systolic array synthesis for CNN. We are also working on improving the generality of the approach to map more general applications to systolic arrays. We present our ongoing compilation framework named PolySA, which leverages the power of the polyhedral model to achieve end-to-end compilation for systolic array architectures on FPGAs. PolySA is the first fully automated compilation framework for generating high-performance systolic array architectures on the FPGA leveraging recent advances in high-level synthesis. We demonstrate PolySA on two key applications: matrix multiplication and convolutional neural network. PolySA is able to generate optimal designs within one hour with performance comparable to state-of-the-art manual designs.

3. CLINK: Compact LSTM Inference Kernel for Energy Efficient Neurofeedback Devices

Neurofeedback devices measure brain waves and generate feedback signals in real time, and can be employed as treatments for various neurological diseases. Such devices require high energy efficiency because they need to be worn or surgically implanted into patients and must support long battery life. In this paper, we propose CLINK, a compact LSTM inference kernel, to achieve highly energy-efficient EEG signal processing for neurofeedback devices. The LSTM kernel can approximate conventional filtering functions while saving 84% of computational operations. Based on this method, we propose energy-efficient customizable circuits for realizing the CLINK function. We demonstrated a 128-channel EEG processing engine on Zynq-7030 with 0.8 W, and the scaled-up 2048-channel evaluation on Virtex-VU9P shows that our design can achieve 215x and 7.9x higher energy efficiency than highly optimized implementations on E5-2620 CPU and K80 GPU, respectively. We carried out the CLINK design in a 15-nm technology, and synthesis results show that it can achieve 272.8 pJ/inference energy efficiency, which further outperforms our design on the Virtex-VU9P by 99x.

4. FlexCNN: End-to-End Optimization of Deep Learning Applications

The irregularity of recent Convolutional Neural Network (CNN) models, such as reduced data reuse and parallelism due to extensive network pruning and simplification, creates new challenges for FPGA acceleration. Furthermore, without proper optimization, there could be significant overheads when integrating FPGAs into existing machine learning frameworks like TensorFlow. Such a problem is mostly overlooked by previous studies. However, our study shows that a naive FPGA integration into TensorFlow could lead to up to 8.45x performance degradation. To address the challenges mentioned above, we propose several SW/HW co-design approaches to perform the end-to-end optimization of deep learning applications. We present a flexible and composable architecture called FlexCNN. It can deliver high computation efficiency for different types of convolution layers using techniques including dynamic tiling and data layout optimization. FlexCNN is further integrated into the TensorFlow framework with a fully-pipelined software-hardware integration flow. This alleviates the high overheads of TensorFlow-FPGA handshake and other non-CNN processing stages. We use OpenPose, a popular CNN-based application for human pose recognition, as a case study. Experimental results show that with the FlexCNN architecture optimizations, we can achieve 2.3x performance improvement. The pipelined integration stack leads to a further 5x speedup. Overall, the SW/HW co-optimization produces a speedup of 11.5x and results in an end-to-end performance of 23.8 FPS for OpenPose with floating-point precision, which is the highest performance reported for this application on FPGA in the literature.

Please find the source code of FlexCNN at: https://github.com/UCLA-VAST/FlexCNN