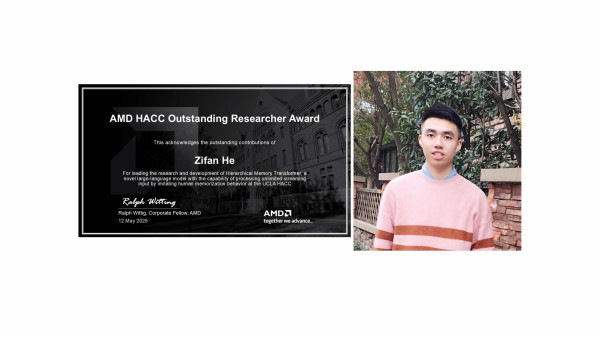

Congratulations to Zifan He for receiving the annual AMD Heterogeneous Accelerated Computing award. He is a second-year PhD student working on algorithm-hardware co-design to enable efficient, high-quality LLM inference. His research includes:

(1) Efficient Language Processing with Unlimited Context Length: Zifan developed the Hierarchical Memory Transformer (HMT), a framework designed to improve memory efficiency in long-context scenarios. HMT segments input sequences, applies recurrent sequence compression, and dynamically retrieves the compressed representations during inference. This plug-and-play framework achieves similar or better generation quality than existing long-context models while using 2-57x fewer parameters and 2.5-116x less memory during inference. Its reduced off-chip memory demands and efficient data movement patterns make HMT especially well suited to FPGA-based acceleration.

(2) Novel Inference Accelerator Design: Recognizing that FPGAs offer more flexible and distributed on-chip memory, Zifan developed the Inter-Task Auto-Reconfigurable (InTAR) accelerator. This design lets resources be repurposed between tasks under a static schedule, optimizing the trade-off between computation and memory access. Experiments on transformer models such as GPT-2 show that InTAR achieves speedups of 3.65-39.14x and computational efficiency gains of 1.72-10.44x over prior FPGA accelerators, and 1.66-7.17x better power efficiency compared to GPUs.

With 3 papers published at top-tier conferences in NLP/ML and FPGA design, Zifan has made impressive contributions that improve both computational efficiency and model performance in LLM inference.